Nvidia has been slowly refreshing their product stack for about six months now as they introduce their new RTX 2000 series of cards. Well, we are finally getting down into the sweet spot, and today they are introducing the replacement for the always-popular GTX 1060. What may come as a surprise to some of you is that the replacement isn't an RTX card. Nvidia's new card is still Turing-based, but it drops the Tensor and RT cores that make an RTX card an RTX card, so this launch is a GTX card: the GTX 1660 Ti. I'm not going to pretend to understand the reasoning behind the move away from the 2000-series numbering, but today I'm going to dive into what is different about the GTX 1660 Ti and then check out the MSI GTX 1660 Ti Ventus XS to see how it performs.

Product Name: MSI GTX 1660 Ti Ventus XS

Review Sample Provided by: MSI

Written by: Wes Compton

Pictures by: Wes Compton

GTX 1660 Ti WUT?

I'm sure a few of you are sitting here saying WTF is a GTX 1660 Ti, and why isn't it an RTX card? So many questions!! Well, I touched on it in the opening, but the reason for going back to GTX from RTX is that Nvidia didn't include what makes an RTX card an RTX card on this one: the ray tracing (RT) cores and the Tensor cores used for AI. Don't panic, though; this isn't a rebranded older design like sometimes happens. The GTX 1660 Ti is still based on the Turing architecture, Nvidia just made a few tweaks. Dropping the RT and Tensor cores was inevitable, and you can tell just by looking at the die sizes of the RTX cards. I talked about it a lot in my early RTX reviews: supporting a full traditional GPU plus Tensor and RT cores makes for a huge die, and large dies cost a lot of money. On top of that, ray tracing capability drops as Nvidia moves down the stack to cards like the RTX 2060, and at some point there is no reason to include it if turning ray tracing on makes games unplayable.

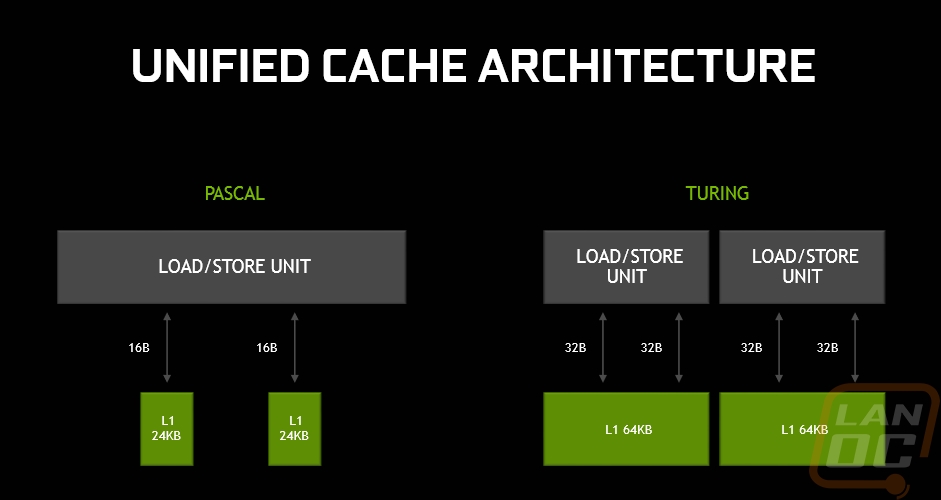

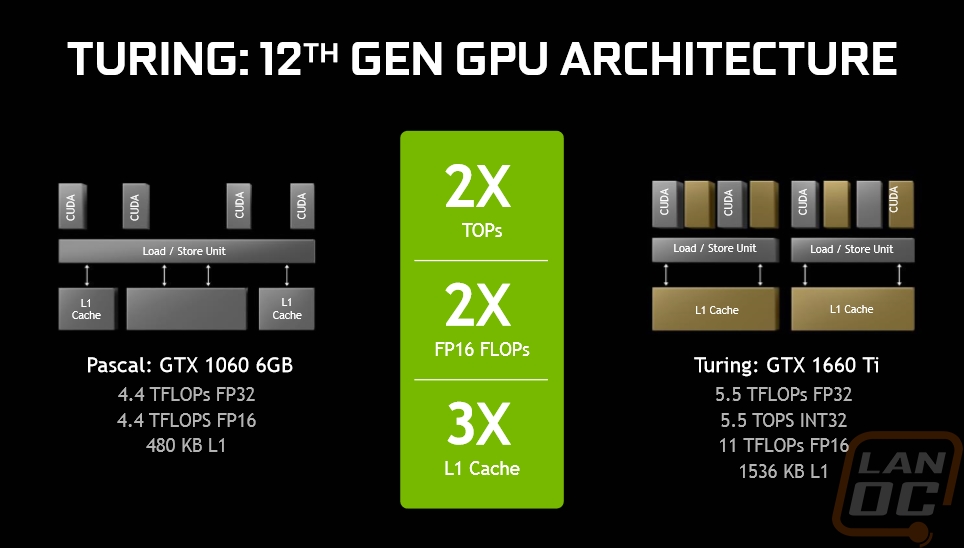

The real question, though, is which features introduced with Turing are staying with this new GPU, because that is what will set it apart from the Pascal-based GPUs. A big one is the new unified cache architecture, which is a big help in accelerating shader processing. Turing combines the L1 cache and shared memory into a single unified cache while also increasing the overall L1 cache size.

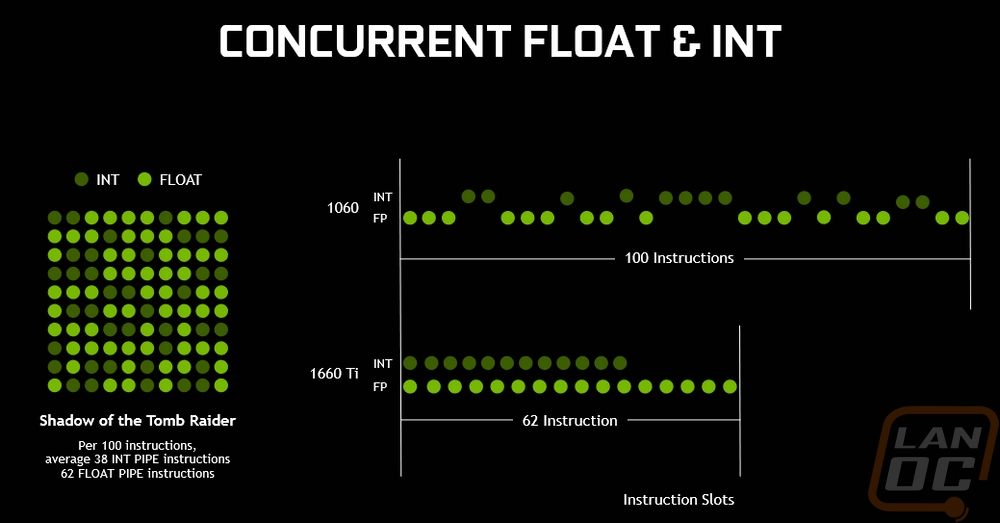

Turing also adds concurrent execution of floating-point and integer instructions (FP32 and INT32). With Pascal, things would stall whenever an INT instruction was being calculated, but Turing has separate data paths so both can run at the same time, which speeds things up significantly. In the example Nvidia provides, something that would take 100 instructions on the GTX 1060 can now be done in 62, because the two types run side by side instead of one at a time.
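To see how a number like 62 can come out of 100, here is a deliberately simplified sketch. It assumes every instruction takes one issue slot and uses a hypothetical mix of 62 floating-point and 38 integer instructions, picked so the serial total is 100; Nvidia's actual example and real GPU scheduling are more complicated than this.

```python
# Toy model of FP32/INT32 issue on one shader pipeline.
# Assumption: one instruction per slot; the 62/38 mix is hypothetical.

def serial_cycles(fp32_ops: int, int32_ops: int) -> int:
    """Pascal-style: FP and INT share one path, so they issue one after another."""
    return fp32_ops + int32_ops


def concurrent_cycles(fp32_ops: int, int32_ops: int) -> int:
    """Turing-style: separate FP32 and INT32 paths issue side by side,
    so the longer of the two instruction streams sets the total."""
    return max(fp32_ops, int32_ops)


if __name__ == "__main__":
    fp32, int32 = 62, 38  # hypothetical instruction mix
    print(serial_cycles(fp32, int32))      # 100
    print(concurrent_cycles(fp32, int32))  # 62
```

The savings depend entirely on the mix: the more integer work a shader has, the bigger the win from running it alongside the floating-point work.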

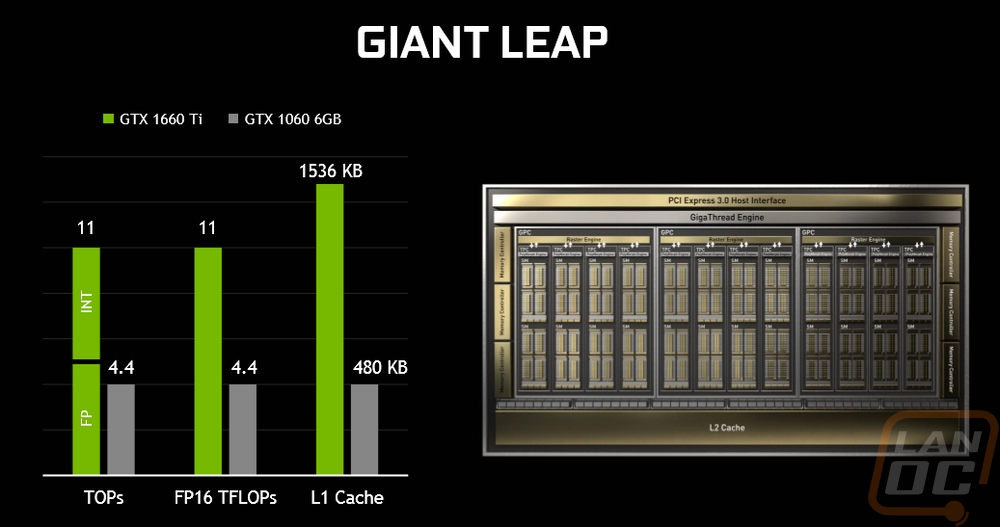

Nvidia spent a lot of time comparing the GTX 1660 Ti with the GTX 1060, because that is the card it is replacing, and on paper at least it does look like a good bump in performance. There are now twice as many TOPS and FP16 FLOPS and three times as much L1 cache. Adding independent INT32 execution is great, and you can see that in the 5.5 TOPS figure, but it's also important to note that even if everything were still running on the FP32 cores, FP32 throughput alone has gone up from 4.4 to 5.5 TFLOPS (teraflops, with FLOPS being floating-point operations per second). That is a crazy amount of processing in one second; it takes me all afternoon to get around to putting the dishes in the dishwasher! For reference (on the card FLOPS, not my extreme laziness), the GTX 980 did 5 TFLOPS and the GTX 780 did 4 TFLOPS. A lot of people who upgrade every two generations might be coming from a GTX 960, which means moving up from 2.4 TFLOPS.
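Where do TFLOPS figures like these come from? The usual back-of-the-envelope formula is CUDA cores × 2 operations per clock (one fused multiply-add counts as two) × clock speed. The sketch below assumes that formula and uses reference boost clocks as I understand them; the 1770 MHz figure for the GTX 1660 Ti comes from its specifications, while the other clocks are my own additions rather than numbers from Nvidia's slides.

```python
# Theoretical peak FP32 throughput, assuming every CUDA core retires
# one fused multiply-add (2 FLOPs) per clock at the rated boost clock.

def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    flops_per_clock = cuda_cores * 2          # FMA = multiply + add
    return flops_per_clock * boost_mhz * 1e6 / 1e12  # MHz -> Hz, FLOPS -> TFLOPS


# (CUDA cores, reference boost clock in MHz); clocks other than the
# GTX 1660 Ti's are assumptions, not figures from this article.
cards = {
    "GTX 1660 Ti": (1536, 1770),
    "GTX 1060": (1280, 1708),
    "GTX 980": (2048, 1216),
    "GTX 960": (1024, 1178),
}

for name, (cores, mhz) in cards.items():
    print(f"{name}: {peak_fp32_tflops(cores, mhz):.1f} TFLOPS")
```

At the reference boost clock the GTX 1660 Ti works out to about 5.4 TFLOPS rather than 5.5, so Nvidia's figure presumably assumes a slightly higher clock; the other three land on the 4.4, 5.0, and 2.4 TFLOPS mentioned above. These are peak numbers, not what you get in a game.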

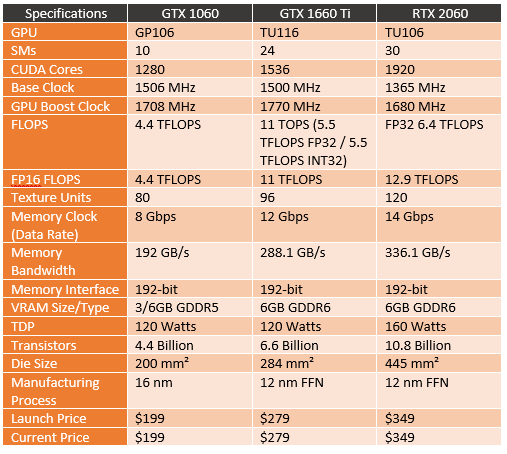

So let's take a look at the specifications. For reference on where the GTX 1660 Ti fits in, I have both the GTX 1060 and the RTX 2060 here as well. For starters, the GTX 1660 Ti officially has a new GPU designation, the TU116. It has 6 fewer SMs than the RTX 2060, which leaves the 1660 Ti with 1536 CUDA cores: 256 more than the GTX 1060 but 384 fewer than the RTX 2060. Interestingly, though, the GTX 1660 Ti has a much higher base clock and boost clock than the RTX 2060, similar to the clock speeds on the GTX 1060. The GTX 1660 Ti's memory runs at 12 Gbps, which is a little lower than the other Turing GPUs but still faster than any of the Pascal GPUs, especially the GTX 1060, which launched with 8 Gbps memory and later saw a 9 Gbps version that I think a lot of people forget about. The memory interface is the same 192-bit width across all three, and at least for now the GTX 1660 Ti is available with 6GB of memory, just like the RTX 2060 (the GTX 1060 also had a 3GB model). Speaking of memory, it is now GDDR6 rather than the GDDR5 of the GTX 1060. Dropping the RT and Tensor cores helped the TDP a lot, as did lowering the number of SMs: the GTX 1660 Ti has a TDP of 120 watts, matching the GTX 1060.
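Two spec-sheet relationships explain most of the numbers in that comparison: CUDA cores are SMs multiplied by cores per SM, and peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate. The sketch below assumes 64 FP32 cores per Turing SM and 128 per Pascal SM, 30 SMs on the RTX 2060 (so 24 on the GTX 1660 Ti), 10 SMs on the GTX 1060, and 14 Gbps memory on the RTX 2060; those values are my additions, not figures stated above.

```python
# Spec-sheet arithmetic, under the assumptions stated in the lead-in.

def cuda_cores(sms: int, cores_per_sm: int) -> int:
    """Total FP32 CUDA cores = SM count x cores per SM."""
    return sms * cores_per_sm


def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s = (bus width / 8 bits per byte) x data rate per pin."""
    return bus_width_bits / 8 * data_rate_gbps


if __name__ == "__main__":
    print(cuda_cores(24, 64))    # GTX 1660 Ti (TU116): 1536
    print(cuda_cores(30, 64))    # RTX 2060: 1920
    print(cuda_cores(10, 128))   # GTX 1060: 1280

    # All three cards use a 192-bit memory bus
    print(bandwidth_gb_s(192, 12))  # GTX 1660 Ti, GDDR6: 288.0
    print(bandwidth_gb_s(192, 14))  # RTX 2060, GDDR6: 336.0
    print(bandwidth_gb_s(192, 8))   # GTX 1060 at launch, GDDR5: 192.0
    print(bandwidth_gb_s(192, 9))   # later 9 Gbps GTX 1060: 216.0
```

Because the bus width is identical across the three cards, the memory data rate alone decides their bandwidth ranking.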

Then we have pricing, and if you read the specs above you already know it. The GTX 1660 Ti is launching at $279, while the RTX 2060 comes in at $349. The GTX 1060 launched at $199 and, surprisingly enough, after all of the mining craziness it is back down to that same price. So some will be disappointed that the GTX 1060's replacement costs $80 more. That is more than just a bump for inflation, but we will have to wait for the performance results to get a real idea of whether the GTX 1660 Ti is a good value.
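To put that $80 in perspective before the benchmarks, here is a quick on-paper comparison of the launch price increase against the increase in peak FP32 throughput (4.4 to 5.5 TFLOPS). This is only a sketch of theoretical numbers; it says nothing about actual gaming performance, which is what the testing is for.

```python
# Percent change from an old value to a new one.

def pct_change(old: float, new: float) -> float:
    return (new - old) / old * 100


if __name__ == "__main__":
    price_increase = pct_change(199, 279)  # GTX 1060 launch price -> GTX 1660 Ti
    fp32_increase = pct_change(4.4, 5.5)   # peak FP32 TFLOPS, GTX 1060 -> GTX 1660 Ti
    print(f"Launch price: +{price_increase:.1f}%")  # +40.2%
    print(f"Peak FP32:    +{fp32_increase:.1f}%")   # +25.0%
```

On paper the price climbs faster than raw FP32 throughput, which is why the real-world results matter so much for the value question.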

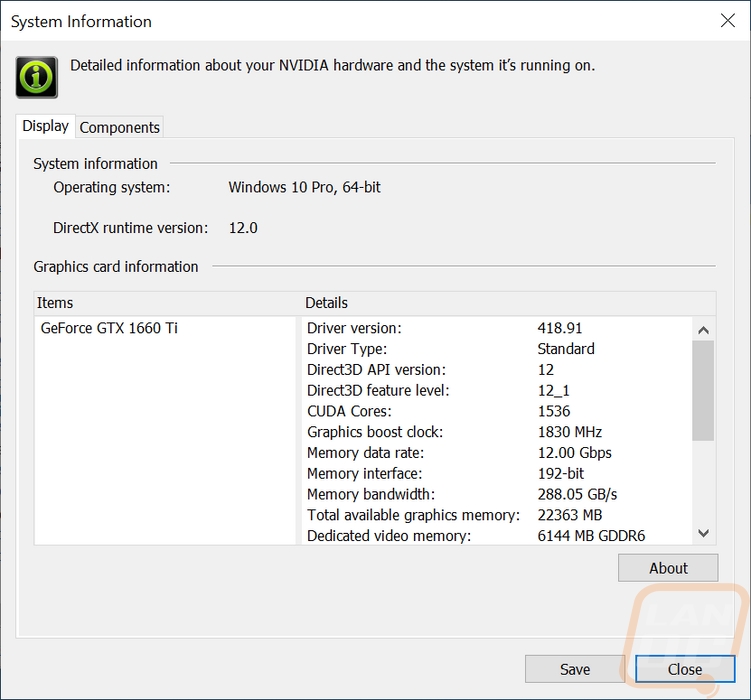

Speaking of which, I should mention the card I am testing. Unlike the RTX launches, this launch doesn't have a Founders Edition card, so the reference clock speeds are only a suggestion, and even at the opening price point of $279 it is possible to see overclocked cards. The MSI GTX 1660 Ti Ventus XS that I am testing is one of them: it lists a boost clock of 1830 MHz, a bump over the 1770 MHz in the GTX 1660 Ti specifications. I would normally confirm with GPU-Z that the specifications match, but I couldn't this time: GPU-Z had a bug that kept it from showing our boost clock speed. So I double-checked in the Nvidia Control Panel that we are getting 1830 MHz, and as you can see we have the 418.91 driver; while it says standard, this is the pre-launch driver.
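For a sense of how big that factory overclock is, the sketch below computes the percentage bump from 1770 MHz to 1830 MHz and the theoretical peak FP32 throughput at the higher clock. It assumes the same cores × 2 × clock formula as a rough estimate; real boost behavior varies with temperature and power limits.

```python
REFERENCE_BOOST_MHZ = 1770  # GTX 1660 Ti reference boost clock
VENTUS_BOOST_MHZ = 1830     # MSI GTX 1660 Ti Ventus XS listed boost clock
CUDA_CORES = 1536


def overclock_pct(reference_mhz: float, card_mhz: float) -> float:
    """Factory overclock as a percentage over the reference clock."""
    return (card_mhz - reference_mhz) / reference_mhz * 100


def peak_fp32_tflops(cores: int, mhz: float) -> float:
    """Peak FP32 TFLOPS, assuming one FMA (2 FLOPs) per core per clock."""
    return cores * 2 * mhz * 1e6 / 1e12


if __name__ == "__main__":
    print(f"{overclock_pct(REFERENCE_BOOST_MHZ, VENTUS_BOOST_MHZ):.1f}%")      # 3.4%
    print(f"{peak_fp32_tflops(CUDA_CORES, VENTUS_BOOST_MHZ):.2f} TFLOPS")  # 5.62 TFLOPS
```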