You have all seen and heard the rumors for months now about Nvidia’s upcoming GPU, code-named Kepler. When we got the call that Nvidia was inviting editors out to San Francisco to show us what they have been working on, we jumped at the chance to finally put the rumors to rest and see what they really had up their sleeve. Today we can finally tell you all the gory details and dig into the performance of Nvidia’s latest flagship video card. Will it be the fastest single-GPU card in the world? We finally find out!

Product Name: Nvidia GTX 680 Kepler

Review Sample Provided by: Nvidia

Review by: Wes

Pictures by: Wes

Kepler: what’s it all about?

During Editors’ Day, Nvidia opened things up by boldly stating that the new GTX 680 will be the most powerful and most efficient card on the market. Soon after, they brought out Mark Rein, Vice President of Epic Games, to show off the Unreal Samaritan demo that Epic made last year. When the demo was originally shown, it required a rig powered by three GTX 580s. While playing the demo, he pointed out that the same demo now runs great on just one card, the Kepler-based GTX 680. Obviously the GTX 680 isn’t three times as powerful as a single GTX 580, but throughout the day they went over all of the pieces that fit together to help them realize that performance increase.

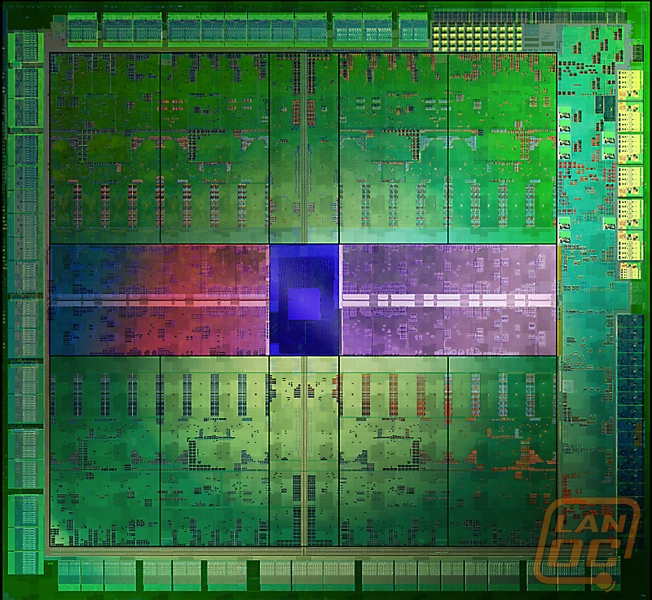

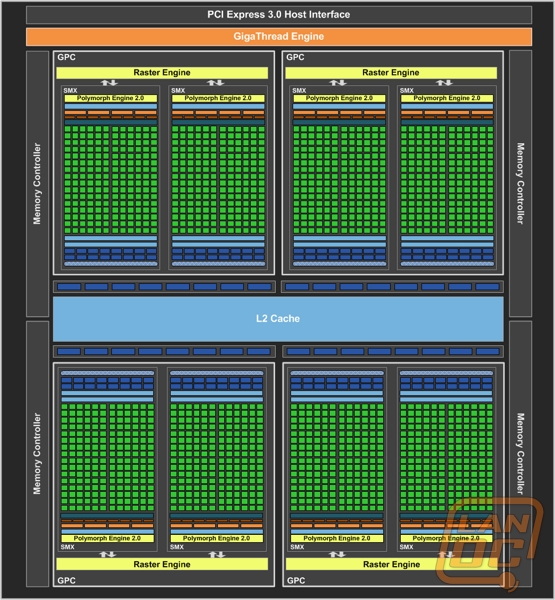

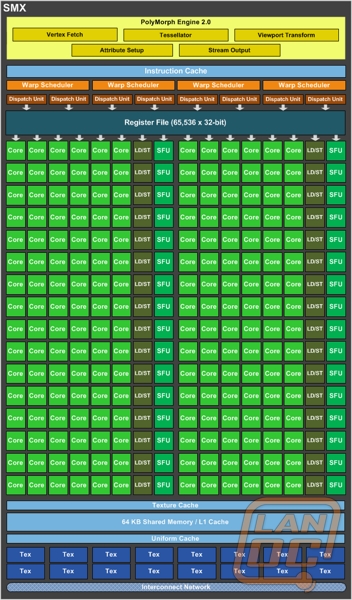

First let’s jump into the hardware itself, because that is obviously a big part of the performance increase. At the core of the GTX 580 there were 16 Streaming Multiprocessors (SMs), each having 32 cores. With the GTX 680, Nvidia has moved to what they are calling the SMX. Each SMX has 192 cores and, according to Nvidia, twice the performance per watt when compared to the GTX 580. The GTX 680 has a total of 8 SMX units, making for a total of 1536 cores!
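For anyone who wants to check the math, the core counts above work out as follows. This is just a quick sketch using the figures quoted in this section; the dictionary and function names are made up for illustration.

```python
# Figures quoted in the review; the names used here are illustrative only.
GTX_580 = {"multiprocessors": 16, "cores_per_multiprocessor": 32}
GTX_680 = {"multiprocessors": 8, "cores_per_multiprocessor": 192}


def total_cores(gpu):
    """Total cores = number of multiprocessors x cores in each one."""
    return gpu["multiprocessors"] * gpu["cores_per_multiprocessor"]


cores_580 = total_cores(GTX_580)
cores_680 = total_cores(GTX_680)
ratio = cores_680 / cores_580

print(f"GTX 580: {cores_580} cores")
print(f"GTX 680: {cores_680} cores")
print(f"Core count ratio: {ratio:.1f}x")
```

Keep in mind that this is only a count of cores. As noted earlier, it does not mean the GTX 680 is that many times faster than a GTX 580.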

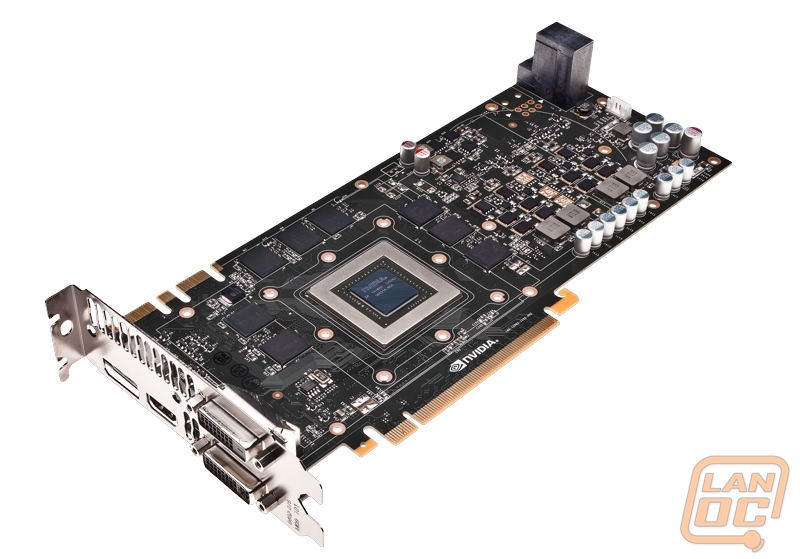

Like I mentioned before, they said the GTX 680 would be a very efficient card considering its performance. That was obvious as soon as they posted its new TDP of 195 watts, fed by just two six-pin power connectors. For those who are counting, that’s about 50 watts less than the GTX 580 and around 20 less than the HD 7970.

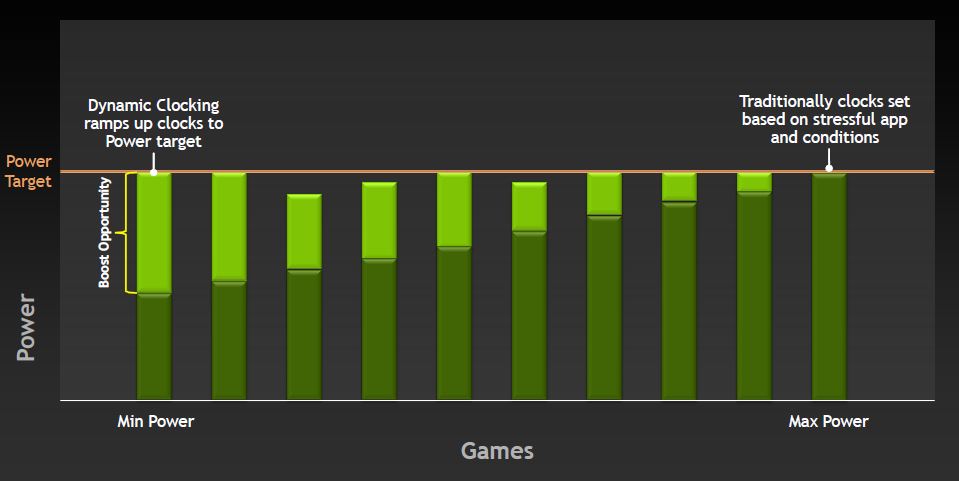

It’s clear that Nvidia took a look at what Intel has been doing on the CPU side of things when creating the GTX 680. Kepler introduces a new Nvidia technology called GPU Boost. It is similar to Intel’s Turbo mode, but with a different approach. The idea is that some games end up requiring less actual wattage to run than others. In the past, Nvidia set clock speeds based on the maximum power usage in the worst-case application. With GPU Boost, the GPU is able to notice that it’s being underutilized, and it will boost the core clock speed and, to a lesser degree, the memory clock speed. You shouldn’t look at this as overclocking, because you can still overclock the GTX 680 on your own. GPU Boost runs all of the time, meaning you can’t turn it off even if you would like to. When you overclock in the future you will actually be changing the base clock speed, and GPU Boost will still work on top of that. For those who are wondering, GPU Boost polls every 100ms to readjust the boost. This means that if a game gets to a demanding part, you’re not going to have your card crash from pulling too much power.
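To picture how a boost scheme like this might behave, here is a toy model in Python. It is only a sketch of the general idea and not Nvidia’s actual algorithm: the 195 W figure and the 100 ms polling interval come from this article, while the base clock, step size, boost cap, and power readings are hypothetical numbers picked for illustration.

```python
POWER_TARGET_W = 195     # board power figure quoted in the review
POLL_INTERVAL_MS = 100   # polling interval quoted in the review
BASE_CLOCK_MHZ = 1000    # hypothetical base clock (the value an overclock would raise)
MAX_BOOST_MHZ = 100      # hypothetical cap on the boost offset
STEP_MHZ = 13            # hypothetical size of one adjustment


def next_boost(boost_mhz, measured_power_w):
    """Return the boost offset to apply during the next polling interval."""
    if measured_power_w < POWER_TARGET_W:
        # Power headroom is available: step up, but never past the cap.
        return min(boost_mhz + STEP_MHZ, MAX_BOOST_MHZ)
    # At or above the target: step back down, but never below the base clock.
    return max(boost_mhz - STEP_MHZ, 0)


def simulate(power_samples_w):
    """Take one power reading per poll and record the resulting core clock."""
    boost = 0
    clocks = []
    for power in power_samples_w:
        boost = next_boost(boost, power)
        clocks.append(BASE_CLOCK_MHZ + boost)
    return clocks


# A light scene (well under the target) followed by a demanding one.
samples = [150, 150, 150, 200, 200]
clocks = simulate(samples)
for i, mhz in enumerate(clocks):
    print(f"t={i * POLL_INTERVAL_MS} ms: {mhz} MHz")
```

In this model the boost is an offset on top of the base clock, so raising `BASE_CLOCK_MHZ` (the overclock) shifts every result up while the boost keeps working on top of it, which matches how overclocking is described above.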

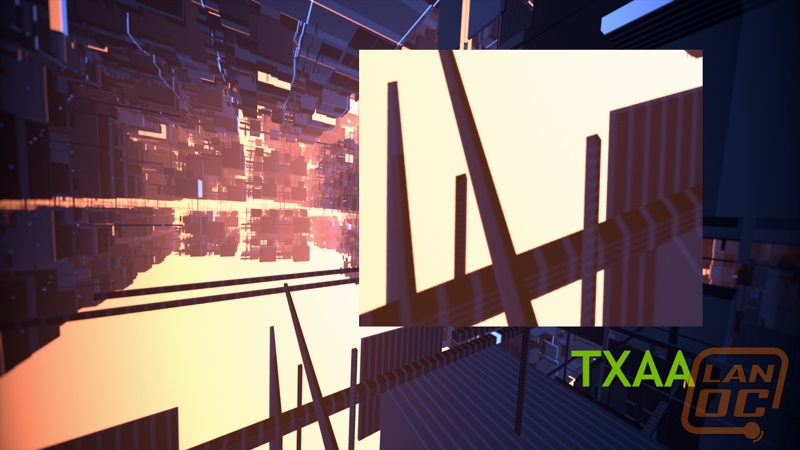

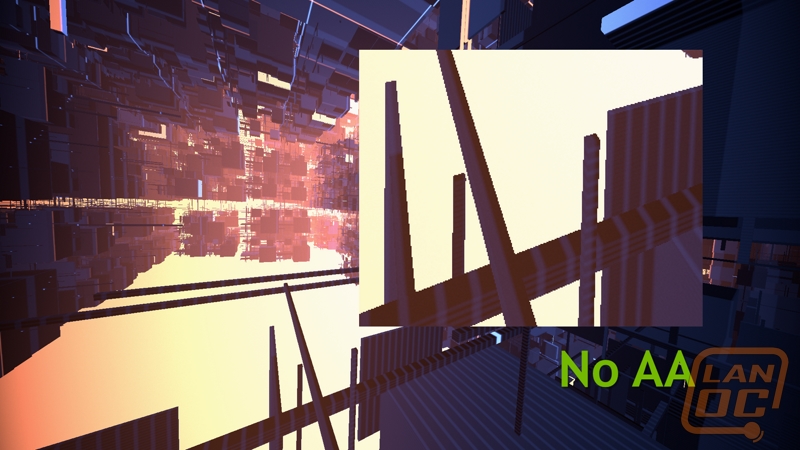

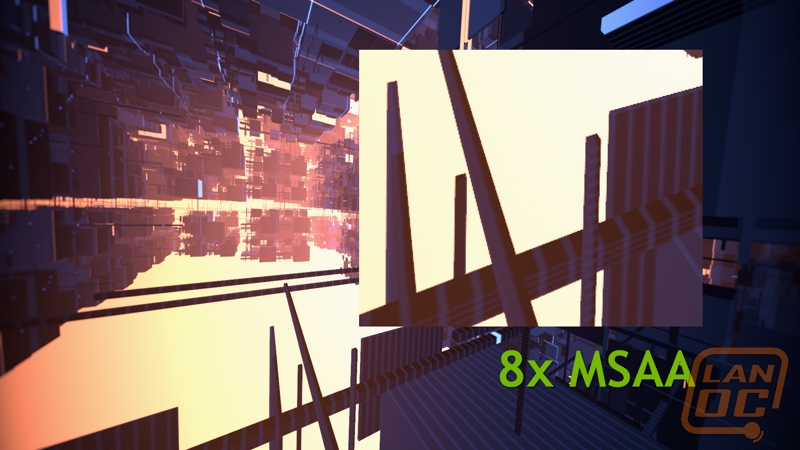

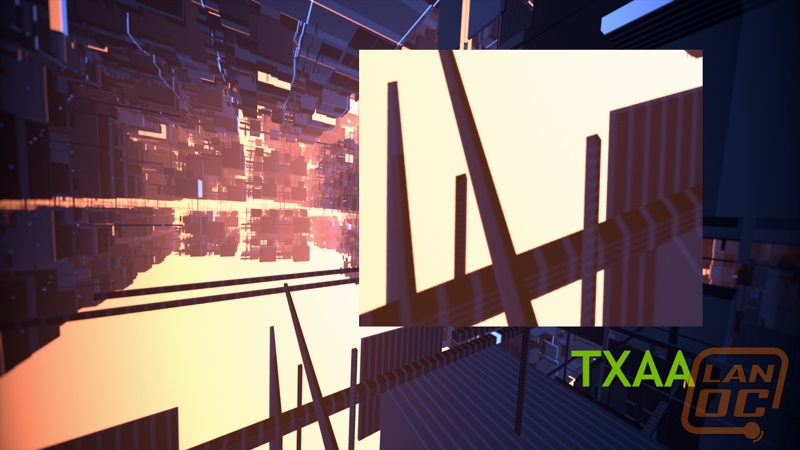

Like I said before, more powerful hardware is only part of the picture when it comes to the Unreal demo system going from three GTX 580s to one GTX 680. Nvidia spent a lot of time working on FXAA and their new TXAA anti-aliasing techniques. In the demo mentioned, they went from using 4x MSAA to FXAA 3. Not only did they see better performance, but it actually did a better job smoothing out the image. I’ve included the images below for you guys to see; I think you will agree.

As I mentioned before, on top of talking about FXAA, Nvidia launched a new anti-aliasing mode called TXAA. TXAA is a mixture of hardware anti-aliasing, a custom CG film-style AA resolve, and, when using TXAA 2, an optional temporal component for better image quality. By taking away some of the traditional hardware anti-aliasing, TXAA is able to look better than MSAA while performing better as well. For example, TXAA 1 performs like 2x MSAA but looks better than 8x MSAA.

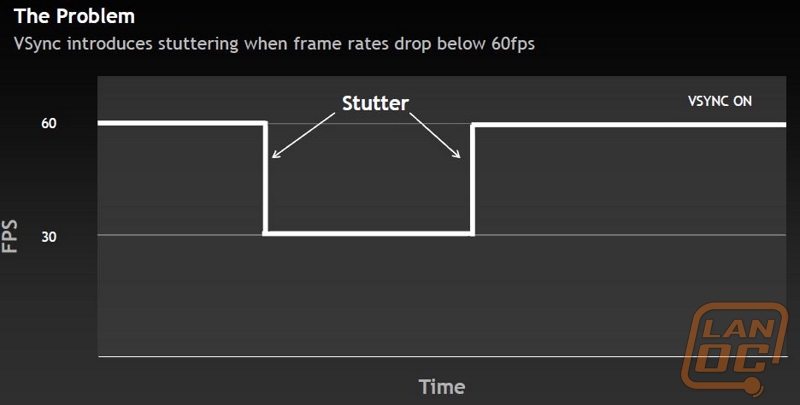

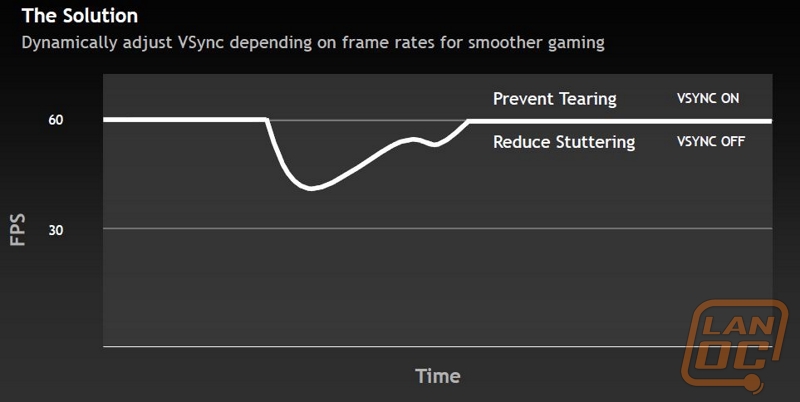

Another feature being implemented with Kepler is Adaptive VSync, which is designed to improve your gaming experience. One complaint that people have with high-end GPUs is screen tearing. One way to prevent that is to turn on VSync. The downside is that when you drop below 60 FPS it will force you down to 30 FPS, which causes a major hiccup and slowdown when the GPU may actually be able to give you 59 FPS. Adaptive VSync watches for this and turns VSync off whenever you drop below 60 FPS, making the transition smoother.
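The drop from 59 FPS to 30 FPS described above can be shown with a little arithmetic. The sketch below assumes a simplified double-buffered model on a 60 Hz display, where a frame that misses a refresh has to wait for the next one; real drivers and triple buffering behave differently, and the function names are made up for illustration.

```python
import math

REFRESH_HZ = 60  # display refresh rate assumed throughout this example


def vsync_fps(gpu_fps, refresh_hz=REFRESH_HZ):
    """Displayed frame rate with regular VSync in a simple double-buffered model.

    Each frame is held for a whole number of refresh intervals, so the
    displayed rate snaps to refresh_hz / 1, / 2, / 3, and so on.
    """
    intervals_per_frame = math.ceil(refresh_hz / gpu_fps)
    return refresh_hz / intervals_per_frame


def adaptive_vsync_fps(gpu_fps, refresh_hz=REFRESH_HZ):
    """Displayed frame rate when VSync is switched off below the refresh rate."""
    if gpu_fps >= refresh_hz:
        # Fast enough to keep up: VSync stays on and caps at the refresh rate.
        return vsync_fps(gpu_fps, refresh_hz)
    # Too slow to keep up: VSync is turned off, so frames show as they arrive.
    return gpu_fps


for fps in (75, 60, 59, 45):
    print(f"GPU renders {fps} FPS: VSync shows {vsync_fps(fps):.0f}, "
          f"adaptive shows {adaptive_vsync_fps(fps):.0f}")
```

The key line is the `math.ceil` call: at 59 FPS every frame takes just over one refresh interval, so it is rounded up to two and the displayed rate is cut in half.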

On top of all of those important features that improve your gaming experience, there are a few other smaller things being introduced that I would like to quickly go over. First, 2D Surround now only requires one GPU to run three monitors. This means you don’t need to run out and pick up a second card just to game with three monitors, although performance will still depend on what you’re playing. To go along with this, they are also introducing 3+1, a setup that allows you to run a fourth monitor above your triple monitors. You can use it while still gaming on the other three, which is perfect for watching notifications or TV shows during slow periods of your gaming.

Other Surround improvements include finally moving the taskbar to the middle monitor, something that should have been done from the start. You can now also maximize windows to a single monitor. A small tweak now allows you to turn off the bezel adjustment on the fly when running multiple monitors; this is great if, for example, you are running your macros in WoW across two monitors, since you won’t miss out on anything.

Lastly, they have also tweaked the GPU’s performance to be a little better optimized when you are running a single-monitor game on one of your triple monitors.